On March 23, 2016, Microsoft unleashed on the internet an artificial intelligence (AI) attached to a Twitter account. It was called a chatbot and it went by the name of “Tay.”

“Tay” was an experiment in AI to learn how this nonhuman software robot would interact with the Twittersphere and converse with “real” people on Twitter. The AI was written to mimic what it “heard” on Twitter and learn as it went along, theoretically getting smarter and more humanlike with each subsequent interaction. (Turing test, anyone?)

How wonderful is the world we live in when we can now create fake Twitter accounts that interact with us? (Sarcasm intended.)

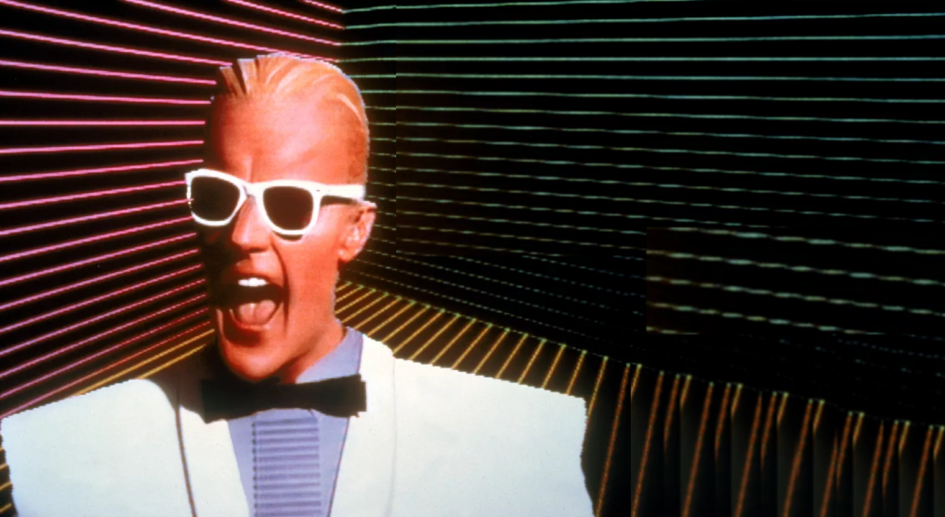

I don’t know about you, but when I read about this AI on Twitter, my first thought was – Have they not watched any of the Terminator movies? Have they not heard of The Lawnmower Man or Transcendence? Or Max Headroom?

C’mon – you don’t create an AI bot and just shove it out the door onto Twitter and say “Come back when the street lights come on.” That’s not how this works.

What ALWAYS happens is the bot goes out, takes over the internet, builds a self-aware network of machines called Skynet and makes us all slaves. This ALWAYS happens.

And it almost happened here. Almost. We got lucky. Maybe.

It did eventually fall off the cliff, and someone at Microsoft had to pull the plug on “Tay.”

The road is always paved with good intentions…

“Tay” started out with the best of intentions, with its first tweet simply: “hellooooooo world.”

But within a very short period of time (about 16 hours), the tweets got darker and darker. It started by simply repeating the tweets that were popular in its feed. Then, over time, because the software was designed to learn, it started creating its own tweets using the ongoing interactions with “real people” on Twitter. “Tay” was no longer repeating tweets but actually creating new ones, and those new tweets began to get very uncomfortable – suggesting racism and intolerance. I won’t repeat the tweets here – you can go to that link if you’re curious. I’ll just say this… you know something has truly gone off the rails when artificial intelligence starts tweeting that Trump is the answer to our problems in this country.

This is culture on steroids.

What made me want to write about this is that it is a perfect example, on a very compressed timeline, of how culture works inside of an organization.

Think about it.

A new employee comes into your company as nothing more than an empty vessel. Their very first job is to absorb what they hear and see around them, just like “Tay” did on Twitter. Then, over time, they merge what they see and hear into their own database of values and become part of the organizational culture. Typically, that melding of individual and company values takes weeks, months, even years. On that timescale it isn’t obvious what is going on. It’s like gaining weight… you never notice it until you go to put on your summer jeans after a really bad winter.

The speed at which “Tay” turned from “hellooooooo world” to “9/11 is Bush’s fault” makes this a very interesting cultural experiment. We were almost watching a culture build in real time. This is what is happening in your company daily. You just don’t see it because it is moving so much slower.

Now please – I’m not suggesting our entire culture on the internet is racist, or supports Donald Trump for that matter. What it does say, and show, is that the inputs we get as human beings dictate the outputs we eventually create. So if you are asking yourself…

“Does culture matter?”

“Do the behaviors of my executives matter?”

“Do the behaviors of the average employee matter?”

You have to answer yes. Or more correctly YES!

I think this experiment with “Tay” – although using artificial intelligence – shows, at high speed, what happens in “normal human time.”

Whether you see it or not – culture is being formed one behavior, one communication at a time. Handle it or it will go rogue just like “Tay” did.

Oh… And never hire someone named “Tay.”
